Video accessibility

High-quality recorded video with audio benefits everyone.

Two types of video

- Prerecorded video with audio

- A closed caption file (WebVTT .vtt or SubRip .srt format) must be provided along with the video.

- Videos can be uploaded to the YuJa Enterprise Video platform, where they are auto-captioned, and you can link to them within D2L.

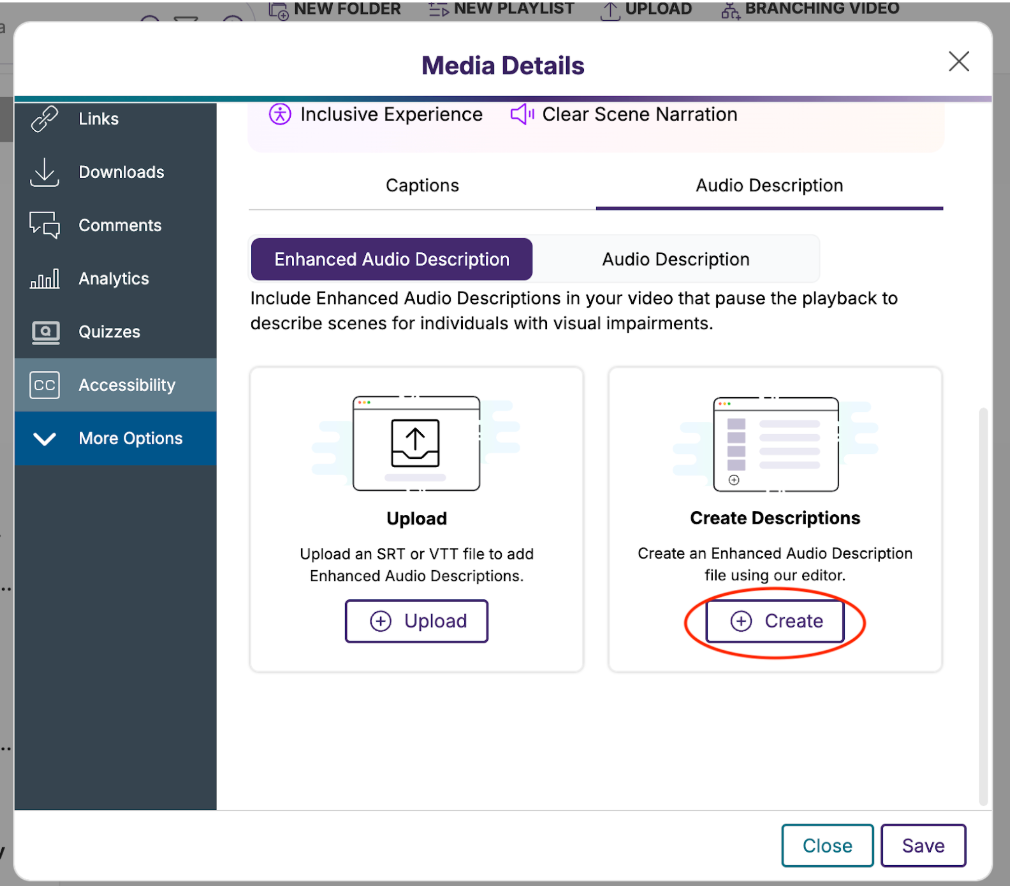

- If the captions do not describe key visual information, audio descriptions are needed.

- Write descriptions of that key visual information and add them manually as audio descriptions in the YuJa video platform.

- Live video with audio streaming

- Provide live captions for live-streamed video events through CART (Communication Access Realtime Translation) services.

- A sign-language interpreter can also be considered.

- After the event, the recorded video can be uploaded to the YuJa Enterprise Video platform.

- The video will be auto-captioned. Check the captions for accuracy.
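
The two caption file formats mentioned for prerecorded video, WebVTT and SRT, are closely related: the main differences are the WEBVTT header line and the decimal separator in timestamps. As a rough illustration only, the Python sketch below converts simple SRT text to WebVTT. The function name srt_to_vtt and the sample captions are hypothetical and are not part of YuJa or D2L; the sketch assumes a well-formed SRT file and ignores styling and positioning.

```python
import re

# SRT timestamps use a comma before the milliseconds (00:00:01,500);
# WebVTT uses a period (00:00:01.500).
_SRT_TIME = re.compile(r"(\d{2}:\d{2}:\d{2}),(\d{3})")


def srt_to_vtt(srt_text: str) -> str:
    """Convert simple SubRip (.srt) caption text to WebVTT (.vtt) text.

    Hypothetical helper for illustration; assumes well-formed input.
    """
    # Drop a leading byte-order mark and normalize line endings.
    cleaned = srt_text.lstrip("\ufeff").replace("\r\n", "\n").strip()
    out = ["WEBVTT", ""]  # WebVTT files start with this header line
    for line in cleaned.split("\n"):
        if "-->" in line:
            # Only timing lines are rewritten, so commas in caption text survive.
            line = _SRT_TIME.sub(r"\1.\2", line)
        out.append(line)
    return "\n".join(out) + "\n"


sample_srt = """1
00:00:01,000 --> 00:00:03,500
[upbeat music]

2
00:00:03,500 --> 00:00:06,000
Welcome, everyone.
"""

print(srt_to_vtt(sample_srt))
```

The SRT cue numbers are kept because WebVTT allows an optional identifier line before each cue's timing line. A converted file should still be reviewed by a person for accuracy.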

About captions and transcripts

Why are these important?

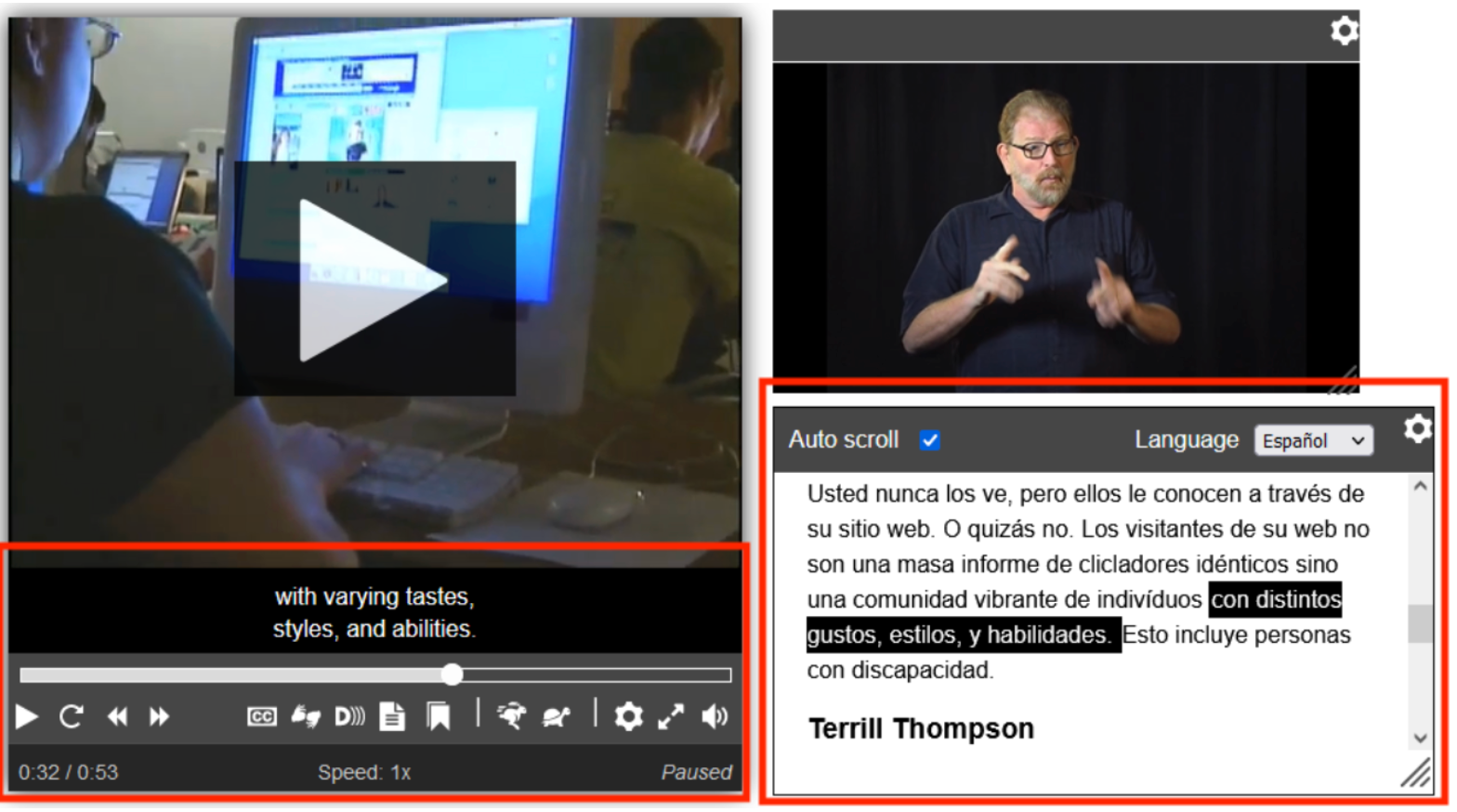

- Captions and transcripts are vital for people with hearing difficulties or deafness. They provide access to dialogue and explain non-speech sounds, such as music and laughter, that occur in the media.

- They also help viewers identify speakers, overcome audio challenges such as background noise or unfamiliar accents, and ensure overall access to media content.

Captions

- There are two main types:

- Closed Captions (CC) can be turned on or off in a media player when provided with the video.

- Open Captions (OC) are permanently embedded into a video.

- Captions must be accurate and properly synchronized with the video and audio.

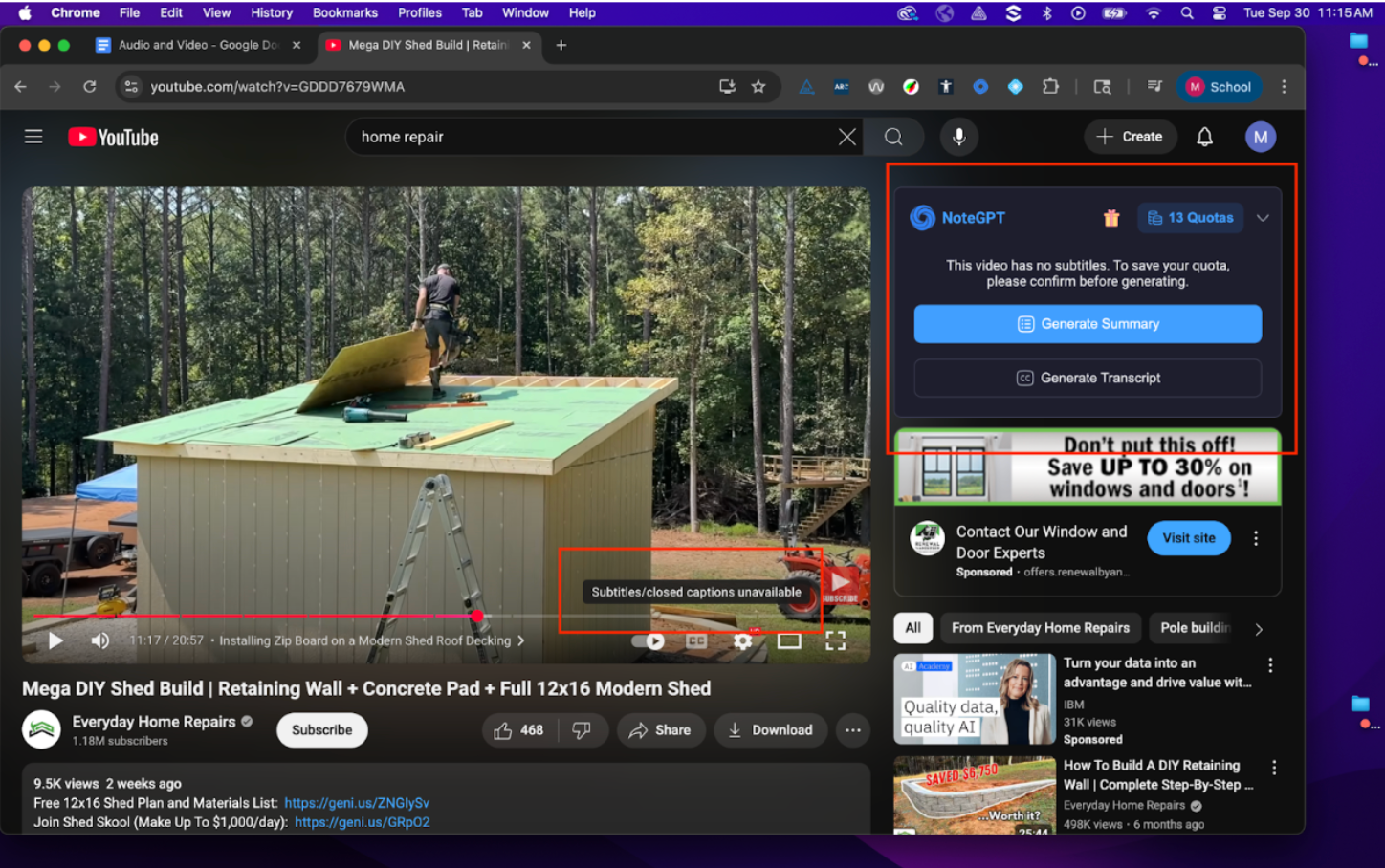

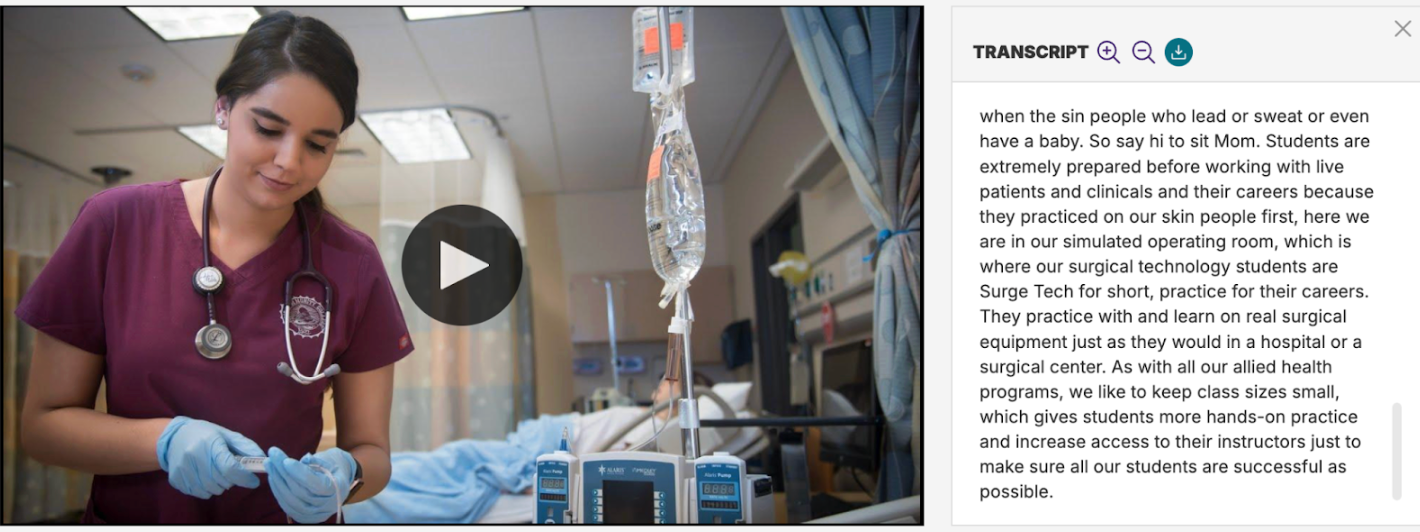

- Inaccurate captions can cause viewers to misunderstand video content, especially when it includes complex or specialized terminology such as math and science equations and formulas.

- Captions should include all dialogue, crucial sounds, and meaningful non-speech information such as music.

- Captions need to clearly identify a speaker, especially when there are multiple speakers.

- Captions help improve comprehension for individuals with cognitive impairments, and they can enhance focus, boost retention, and support diverse learning styles.

- Captions are different from subtitles:

Subtitles are used for language translation. They assume the viewer can hear the audio but may not understand the language, so they typically translate only the dialogue.
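
To make the caption guidelines above concrete (speaker identification, non-speech sounds, and timing), here is a minimal Python sketch that writes caption cues as WebVTT text. The Cue class, the to_vtt function, and the sample cues are assumptions made up for this illustration; they are not a YuJa or D2L feature. Speakers are marked with the WebVTT voice tag (<v Name>), and non-speech sounds are written in square brackets by convention.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Cue:
    """One caption cue (hypothetical structure for this sketch)."""
    start: float                   # start time in seconds
    end: float                     # end time in seconds
    text: str                      # dialogue or a bracketed sound, e.g. "[laughter]"
    speaker: Optional[str] = None  # speaker name, if the cue is dialogue


def format_timestamp(seconds: float) -> str:
    """Format seconds as a WebVTT timestamp, hh:mm:ss.ttt."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{ms:03d}"


def to_vtt(cues: List[Cue]) -> str:
    """Build WebVTT text, rejecting cues that end before they start."""
    parts = ["WEBVTT", ""]
    for cue in cues:
        if cue.end <= cue.start:
            raise ValueError(f"cue ends before it starts: {cue.text!r}")
        # The <v Name> voice tag identifies who is speaking.
        body = f"<v {cue.speaker}>{cue.text}" if cue.speaker else cue.text
        parts += [f"{format_timestamp(cue.start)} --> {format_timestamp(cue.end)}", body, ""]
    return "\n".join(parts)


cues = [
    Cue(0.0, 2.5, "[upbeat music]"),
    Cue(2.5, 6.0, "Welcome to the course.", speaker="Instructor"),
    Cue(6.0, 9.0, "Thanks for having me.", speaker="Guest"),
]
print(to_vtt(cues))
```

Whether a player displays the voice name varies, so some caption authors also write the speaker's name in the caption text itself. A check like the one in to_vtt only catches structural timing errors; confirming that captions match the audio still requires watching the video.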

More about captions

- If a video is hosted on the YuJa Enterprise Video platform and embedded into D2L, Panorama in D2L checks for the presence of captions.

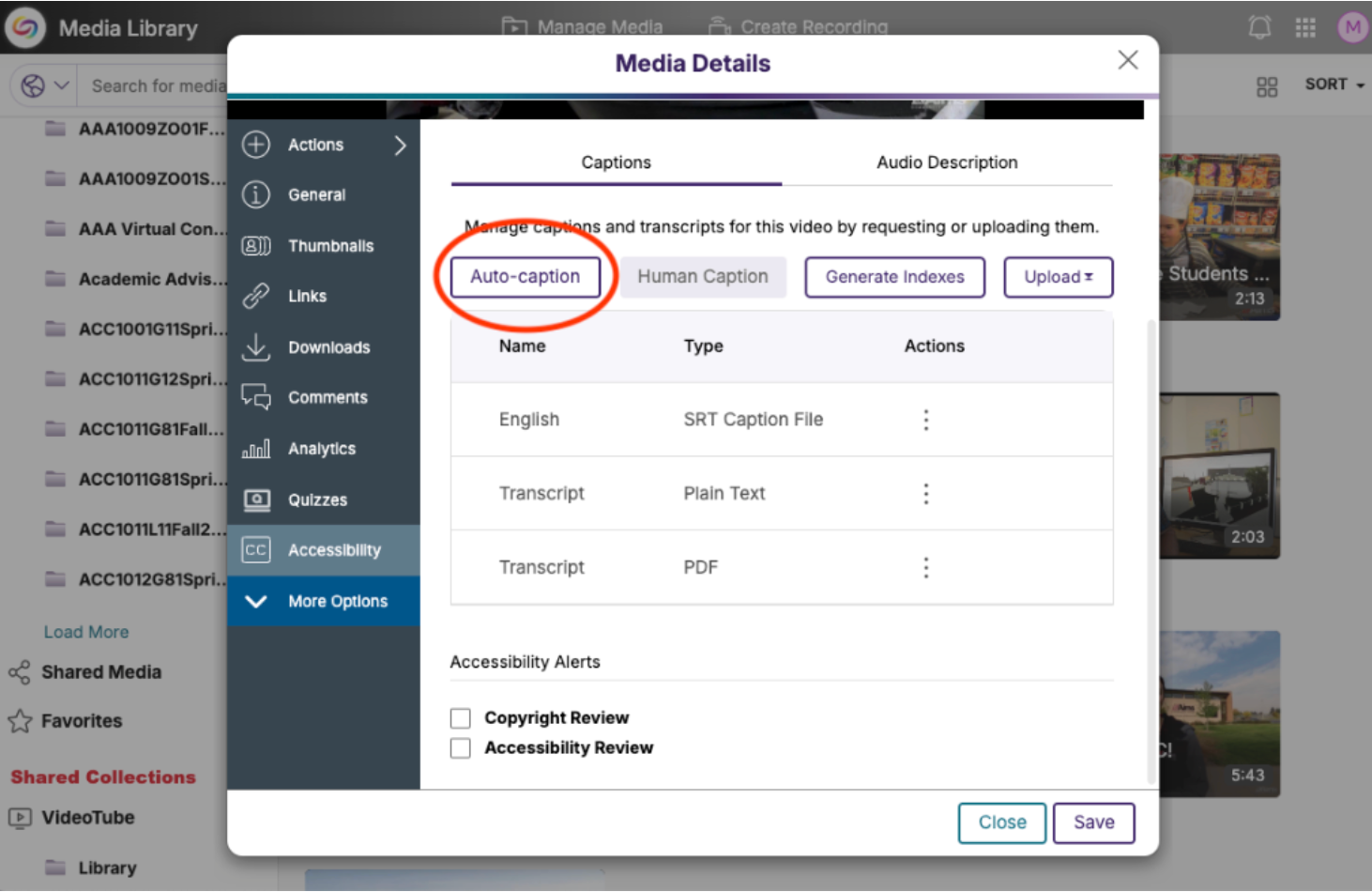

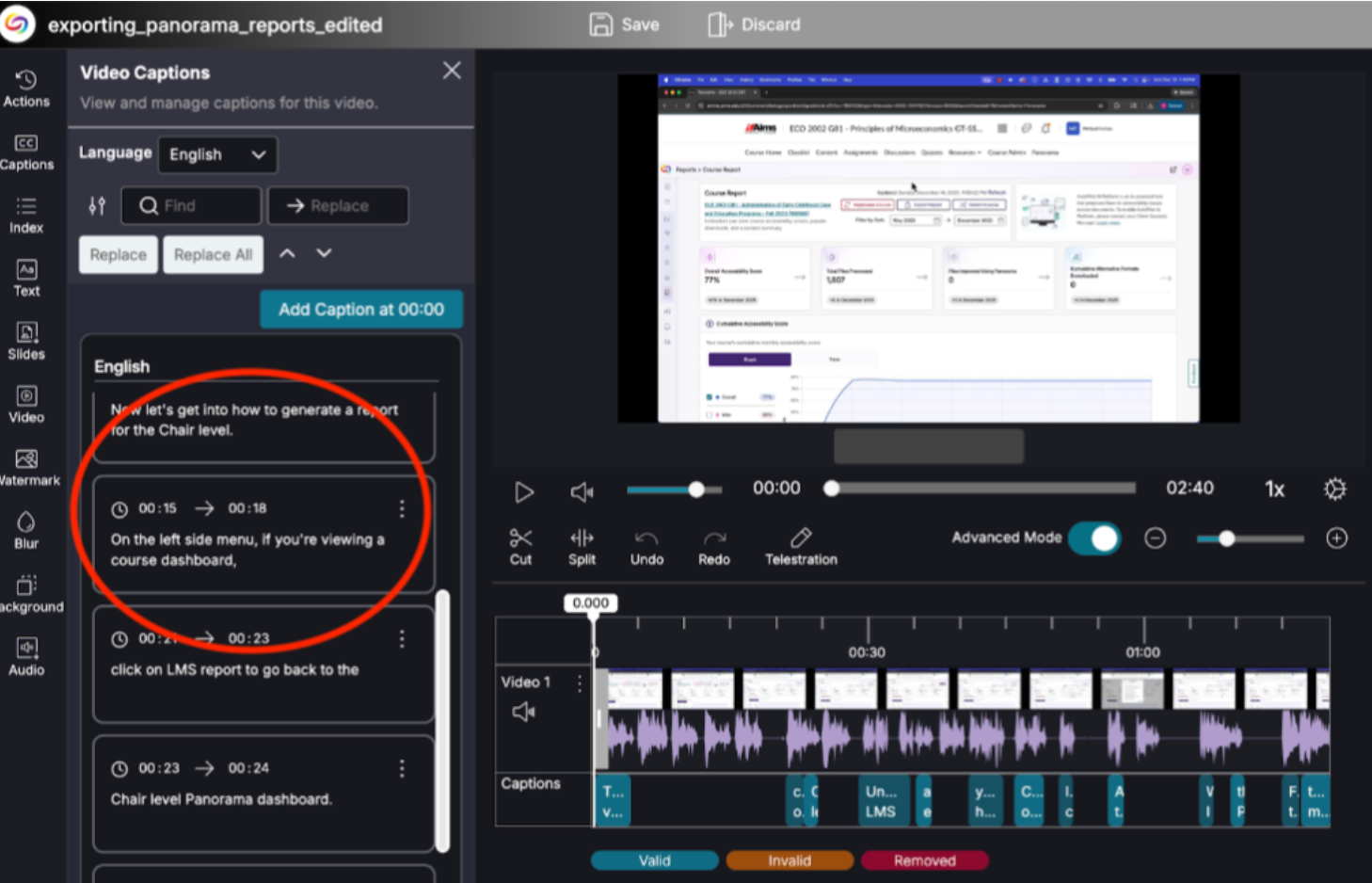

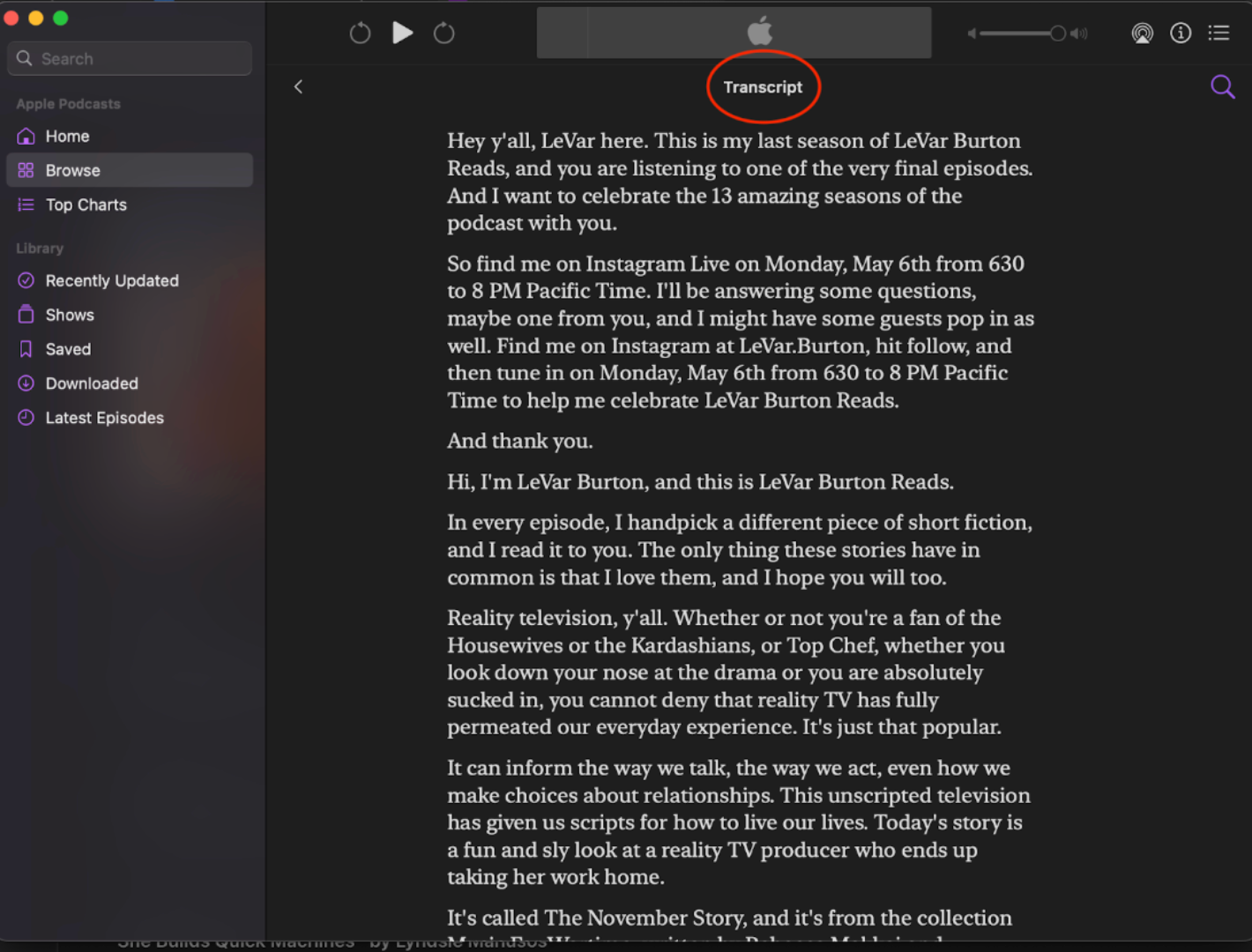

- When a video is uploaded to the YuJa video platform, it is captioned automatically. The first screenshot shows the Media Details > Accessibility page. The second screenshot shows the Video Editor being used to correct the captions for accuracy, spelling, and context.